In this article, an ML developer discusses chatbots: what they’re for, how they’re created and how to teach them to converse on arbitrary topics. He also explains why not all bots are actually smart.

Operational business analysis, customer support and psychological assistance: what are chatbots that can talk about anything for?

Aleksandr Konstantinovsky is a senior developer at the MTS AI Machine-Learning Department. He’s in charge of creating chatbots that are not only capable of helping the user resolve their particular issue, but also of just keeping the conversation going. Developers call these bots “chatters.” The ability to talk about anything is a human prerogative; soon, however, AI will learn to talk in a way that makes it impossible to distinguish one from the other. What’s important to consider when creating a chatbot for communication, and how to know when the result is great? We discussed it with Aleksandr.

What are chatbots?

Chatbots are computer programs, but they’re distinctive in terms of how they interact with the user: you can talk to a bot like a real person, that is – in an understandable conversational language. Today, chatbots can be considered the “face” of business, because customers communicate with them in the same way as with a customer support specialist – via a messenger. Chatbots are often ML-based, which helps them perform complex tasks and solve them more accurately. Chatbots can be placed on any platform that allows for user interaction – both in the familiar messenger and on the company website.

For example, a bot can understand when a user says something rude. This task can be resolved with or without machine learning. It can be accomplished without ML by making a list of curse words. The bot will only understand that the customer is being rude when a word from this list is present in the user’s speech. In the case of using ML algorithms, on the other hand, the chatbot will be able to read between the lines to capture the meaning of what the person is saying – even if they’re not using profanity in their speech.
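
The word-list approach described above can be sketched in a few lines. This is only an illustration: the `RUDE_WORDS` set and the `is_rude` function are hypothetical names, and the three listed words are placeholders rather than a real profanity list.

```python
import re

# Hypothetical placeholder list; a real bot would maintain a much larger one.
RUDE_WORDS = {"idiot", "stupid", "useless"}

def is_rude(message: str) -> bool:
    """Return True if any word from RUDE_WORDS appears in the message."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in RUDE_WORDS for word in words)

print(is_rude("This bot is useless!"))                 # True
print(is_rude("Oh great, another brilliant answer."))  # False
```

The second message is sarcastic, yet the list-based check lets it through. This is the gap that an ML-based classifier is meant to close.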

What are the criteria for classifying chatbots?

There are two main groups of chatbots: task-oriented and non-task-oriented bots. The first class is very broad: it covers many types of tasks, ranging from analyzing the tone of communication to booking tables at restaurants. Chatbots from the second group are multimodal systems capable of handling several different kinds of user requests. The most striking example is smart assistants, which turn on the lights, put on music and keep up a dialogue on random topics.

First and foremost, chatbots are designed to alleviate the workload of people – call center operators, moderators, administrators and other professionals who work with repetitive tasks. Bots make it possible to avoid wasting precious human time on routine matters and to deal instead with more creative and complex tasks that AI can’t handle yet. Moreover, a bot can work 24/7 and its development, implementation and support don’t require serious investments. Chatbots can also collect data and focus on prospects. For example, a bot can communicate with a person and simultaneously save information about the user to improve interaction with them in the future.

A chatbot for any purpose

A bot is capable of coping with almost any task that a person regularly performs online. It can spare a user the Outlook interface by forwarding all mail to a Telegram chat, where it is processed according to a particular algorithm. The same can be done with weather forecasts, table reservations and car service bookings. In theory, there are virtually no constraints on its application.

During his student days, Aleksandr worked with a customer who wanted to get a better understanding of what his employees were doing during their working hours. This task was accomplished with the help of a Telegram bot, which tracked the location of subordinates and requested photos of workplaces. Employees sent information to the bot, it counted the working hours and the administration controlled all of it.

A chatbot can become a pocket analyst for an executive. To do this, the bot needs access to different systems that it uses to collect and analyze data and then provides the person with pre-processed information. AI-based functionality can be integrated into a chatbot so that a person can query connected databases without using a programming language. The bot will translate it into “machine” language, make its request and provide an answer. Such solutions haven’t become widespread yet – but it’s only a matter of time before they do.
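
As a toy illustration of the "question in, query out" idea, the sketch below maps recognized phrases to fixed SQL statements and runs them against an in-memory SQLite database. Everything here is an assumption made for the example: the `TEMPLATES` mapping, the `answer` function and the `sales` table are hypothetical, and a production system would use an ML model rather than phrase matching.

```python
import sqlite3

# Hypothetical phrase-to-SQL mapping standing in for an ML translation model.
TEMPLATES = {
    "total sales": "SELECT SUM(amount) FROM sales",
    "number of orders": "SELECT COUNT(*) FROM sales",
}

def answer(question: str, conn: sqlite3.Connection):
    """Translate a recognized question into SQL, run it, return the value."""
    text = question.lower()
    for phrase, sql in TEMPLATES.items():
        if phrase in text:
            return conn.execute(sql).fetchone()[0]
    return None  # the bot did not understand the question

# Demo database with made-up figures.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?)", [(100.0,), (250.0,)])

print(answer("What are the total sales?", conn))  # 350.0
```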

Chatbots are versatile assistants. They can be used instead of heavyweight analytical systems or apps to automate business processes. It’s convenient both from the standpoint of usability, as well as from the cost savings perspective. The business only needs to pay for the work of one developer and bot hosting, so that it “lives” on the server. According to Aleksandr, many companies could get by with one chatbot instead of high-load automated control systems.

The art of small talk

Communicating on a random topic is a skill known as small talk, and until now only humans have possessed it. However, there has long been demand for simple communication with a machine. A user may want more from their smartphone than just making calls or going online: emotional closeness, for example. A chatbot capable of conversing on philosophical topics or discussing music or cinema with a person is much more inviting.

Why might a business need these skills? If we view the art of communicating on random topics as a separate skill, then a chatbot capable of chatting with a person can attract new users. This, in turn, helps facilitate its market promotion. Remember how the Alisa virtual assistant gave funny answers at first? They went viral, turned into memes and people wanted to try chatting with her for themselves.

The task of developing a chatbot capable of communicating on free topics is very nuanced. To perform it seamlessly, you need qualified experts, a budget, powerful hardware, and most importantly – a lot of data. Often, only the biggest players have such a set.

Many well-known solutions use deep neural networks that are trained on vast arrays of data. The more data, the better the neural network functions – there can’t be a pre-set limitation on the scope of data guaranteeing the quality of the system’s operation. Working with data when creating an AI model is the main – and most difficult – part of the task. It presents its challenges: the low quality of data to which there is open access, the small number of datasets in the Russian language, and the lack of regulation in this area.

There are three levels of complexity when creating chatbots capable of speaking different languages. The easiest is when the bot speaks English: a lot of data is available for building it. The medium level is when there is less data, but still enough, as with Russian or Chinese. The complexity of creating a chatbot in these two languages is comparable, since neither is used internationally as widely as English. The highest level of complexity is when there is very little data or none at all: for example, if the bot must speak Hebrew.

How is a chatbot created?

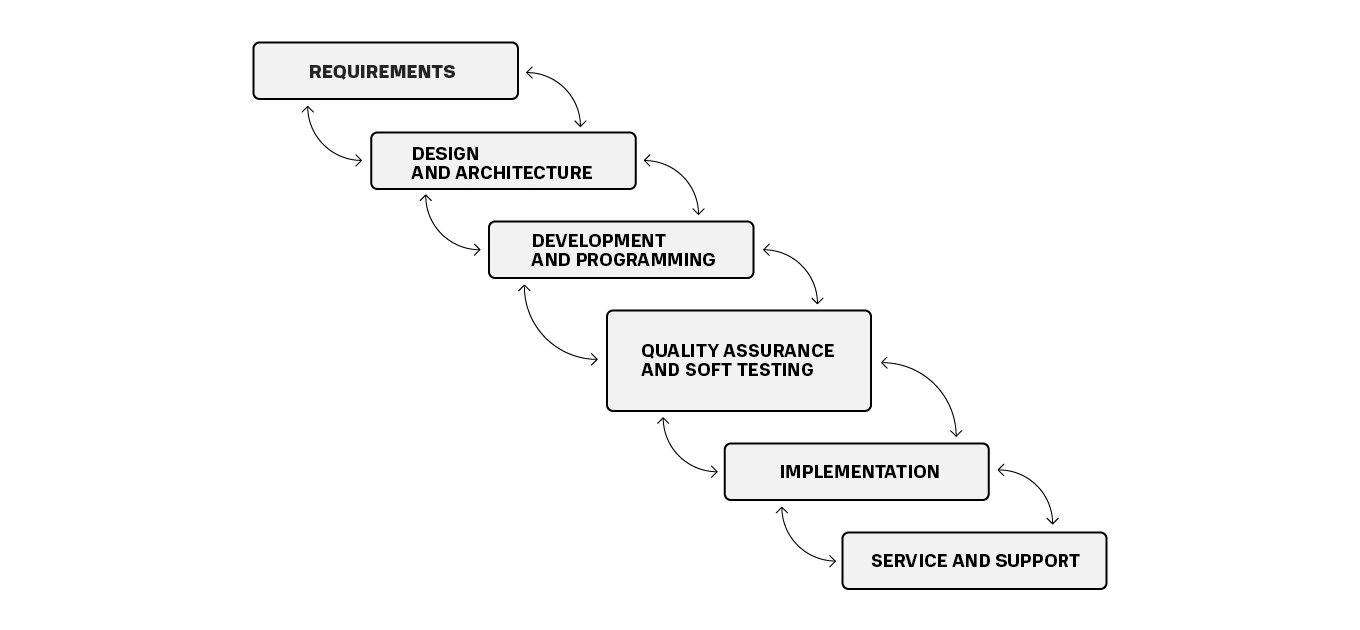

The process of creating a chatbot is a long story, from drafting the technical specification to rolling the bot out into production. Managers usually set the goals and deadlines, while developers handle strict module-by-module development and version control. Sometimes it goes differently: a product can start as a training task for an intern or a junior specialist who builds the chatbot, and if management likes it, the project evolves into a commercial solution. Generally speaking, the process of creating a chatbot resembles the standard software lifecycle. It encompasses requirements development, design, implementation, testing and operation, after which all of these stages are repeated.

The number of iterations that must be completed to train a neural network depends on task complexity, the specific model and the scope of data involved. The size of the neural network directly affects its ability to operate in a more complex way – and to remember. The larger the neural network, the more data it needs to train, and the more time it takes to train. For example, a small neural network that is supposed to distinguish cats from dogs can be quickly trained on a home computer. But Google’s AlphaGo neural network, which defeated the world champion in Go, was trained on a supercomputer for several weeks.

When do you need ongoing support for a working solution? If the product needs to be improved and refined, then it’s necessary to continue collecting data and retraining the system. Nowadays, the market is moving towards automating this process. If the model works well and solves the task based on the primary dataset, then you may not need to spend resources on its ongoing support.

Level of communicative humanity. How do you know if a chatbot is of high quality?

The choice of metrics for assessing the quality of a chatbot depends on how the task is formulated. For chatterbots, both automatic criteria and criteria requiring manual work are used.

For many years, scientists have been trying to develop an automatic metric indicating the level of communicative adequacy or humanity of the text that the chatbot produces. The most popular metric is called “perplexity”, which can be viewed as an intermediate assessment. Perplexity reflects how uncertain the model is when predicting the next word of real human text. The lower the indicator, the better the chatbot tends to communicate. Engineers monitor perplexity throughout the entire training session, and after training several different neural networks, they choose the one with the best value. The next stage is a live test using the second category of metrics, which requires people to perform the assessment.
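
In language modeling, perplexity is commonly computed as the exponential of the average negative log-probability that the model assigns to each token of a reference text. The sketch below shows that arithmetic only. The function name `perplexity` and the input format (a plain list of per-token probabilities) are assumptions made for illustration, not part of any particular framework.

```python
import math

def perplexity(token_probs):
    """exp of the mean negative log-probability over the tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# A model that is always torn between four equally likely tokens
# has a perplexity of about 4; a more confident model scores lower.
uncertain = perplexity([0.25, 0.25, 0.25, 0.25])
confident = perplexity([0.9, 0.8, 0.95, 0.85])
print(uncertain > confident)  # True
```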

First test option: people are given two different versions of the chatbot to talk to and they decide which version is better. This assessment is relative and is suitable for pairwise comparisons.
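
A pairwise test of this kind reduces to counting votes. The snippet below is a minimal sketch that assumes each evaluator's verdict is recorded as a version label; the function name `preference_share` and the labels "A" and "B" are hypothetical.

```python
from collections import Counter

def preference_share(votes):
    """Return the fraction of evaluators who preferred each version."""
    counts = Counter(votes)
    total = len(votes)
    return {version: counts[version] / total for version in counts}

print(preference_share(["A", "B", "A", "A"]))  # {'A': 0.75, 'B': 0.25}
```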

The second option uses absolute assessment criteria: a person communicates with the bot and assesses two indicators, sensibleness and specificity. People check whether the bot’s responses make sense in context (whether or not the bot is speaking gibberish) and how specific, original and interesting its responses are. The average of the two indicators is the final absolute score by which chatterbots can be compared.
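
The averaging step can be sketched as follows, assuming every bot response receives two yes/no labels from an evaluator. The function name `average_score` is hypothetical, and the rule that a response cannot count as specific unless it is also sensible is an assumption of this sketch, not something the article states.

```python
def average_score(ratings):
    """ratings: list of (sensible, specific) boolean pairs, one per response."""
    if not ratings:
        raise ValueError("need at least one rated response")
    n = len(ratings)
    sensibleness = sum(1 for sensible, _ in ratings if sensible) / n
    # Assumption: a nonsensical response is never counted as specific.
    specificity = sum(1 for sensible, specific in ratings
                      if sensible and specific) / n
    return (sensibleness + specificity) / 2

ratings = [(True, True), (True, False), (False, False), (True, True)]
print(average_score(ratings))  # 0.625
```

In this example, three of four responses are sensible (0.75) and two of four are specific (0.5), so the final score is their mean, 0.625.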

For task-oriented bots, the assessment criteria are different. Whereas the chatterbot’s task is to keep the conversation going, a bot focused on a specific task is responsible for solving a specific user problem. When the bot’s task is to provide information at the user’s request, we might not know for sure whether the bot hit the target, that is, whether it provided exactly the information required. To understand whether a bot has successfully resolved a task, users are often asked to leave a rating or an emoticon at the end of their interaction with the system. This makes it possible to compute accurate metrics, for example the percentage of users that the bot has helped. This approach also helps analyze which queries are resolved less or more frequently and adjust the system’s operation based on this data.
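
The "percentage of users helped" metric might be computed along these lines. The 1 to 5 rating scale, the threshold of 4 and the function name `helped_percentage` are all assumptions chosen for the example. Users who skipped the rating are recorded as `None` and ignored.

```python
def helped_percentage(ratings, threshold=4):
    """Share of rated sessions scoring at or above the threshold, in percent."""
    rated = [r for r in ratings if r is not None]
    if not rated:
        return None  # nobody left a rating
    helped = sum(1 for r in rated if r >= threshold)
    return 100 * helped / len(rated)

print(helped_percentage([5, 4, 2, None, 1]))  # 50.0
```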

Chat as you would with your best friend

A great example of a chatbot that can talk to a user about anything is Replika AI. Its creators claim that their chatbot helps millions of people psychologically, supports them emotionally, and that thanks to such communication people stop feeling lonely. Amazingly, people become emotionally involved with a machine even though its side of the dialogue is the result of matrix multiplication.

Why aren’t all bots smart?

Machine learning is never 100% accurate. Its nature is such that, although we try to approximate a person’s preferences as closely as possible, we cannot meet them completely. The quality of many bots is also reduced by censorship and companies’ fear of harming their image. The classifier of forbidden topics strongly affects chatbots capable of talking about random topics: it limits them.

How does censoring work? At the data collection stage, the dataset is purged of the parts that people could find offensive. Once the system has been trained, its output should be checked again: after the chatbot has “thought up” the response but before it is sent to the user, the response must be evaluated for possible obscenity. Unfortunately, current “censoring” is imperfect and reduces the quality of the main module, which can affect the chatbot’s overall operation.
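
The two checkpoints, one before training and one before sending, can be sketched as below. This is a simplified illustration: a word list stands in for the forbidden-topic classifier, and all names (`BLOCKLIST`, `FALLBACK`, `is_offensive`, `clean_dataset`, `safe_reply`) as well as the placeholder words are hypothetical.

```python
import re

BLOCKLIST = {"darn", "heck"}  # hypothetical placeholder words
FALLBACK = "Let's talk about something else."

def is_offensive(text: str) -> bool:
    """Word-list stand-in for a trained forbidden-topic classifier."""
    return any(w in BLOCKLIST for w in re.findall(r"[a-z']+", text.lower()))

def clean_dataset(samples):
    """Checkpoint 1: drop offensive samples before training."""
    return [s for s in samples if not is_offensive(s)]

def safe_reply(generate, user_message: str) -> str:
    """Checkpoint 2: evaluate the generated response before sending it."""
    candidate = generate(user_message)
    return FALLBACK if is_offensive(candidate) else candidate

print(clean_dataset(["hello there", "what the heck"]))  # ['hello there']
print(safe_reply(lambda msg: "heck no", "hi"))          # Let's talk about something else.
```

Replacing a blocked response with a generic fallback is one way the filter can lower conversational quality, which matches the trade-off described above.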

Chatbots aren’t uncommon today. They help people pay for mobile and Internet services, explain how to book a car for servicing, and resolve customer service issues for online stores, banks, and other organizations. Increasingly, users expect such systems to show humanity and emotionality towards them. This is where the skill of making small talk on any topic comes to the rescue. However, the potential of chatbots and other virtual assistants hasn’t been fully explored yet; over time, the range of their application in business and personal affairs will only grow and the technology for creating chatbots will continue to improve.